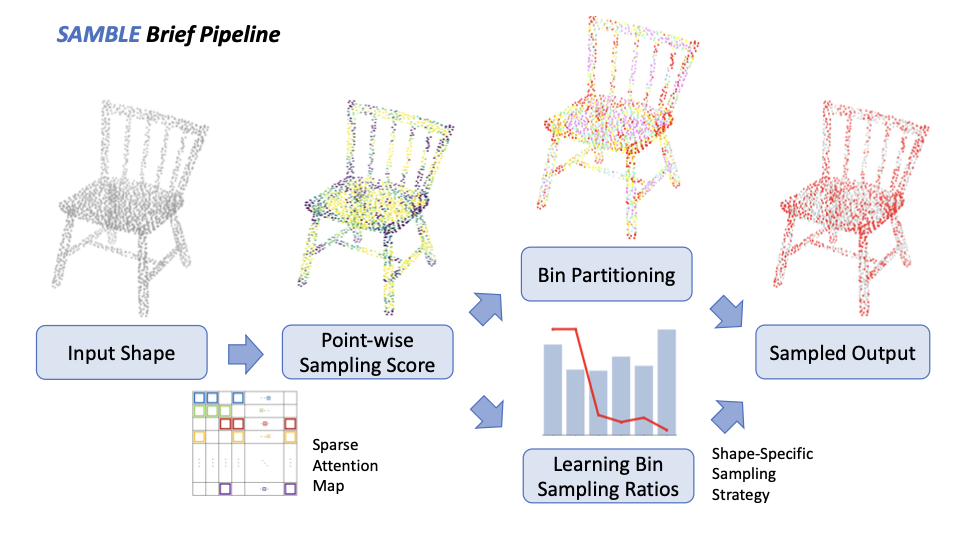

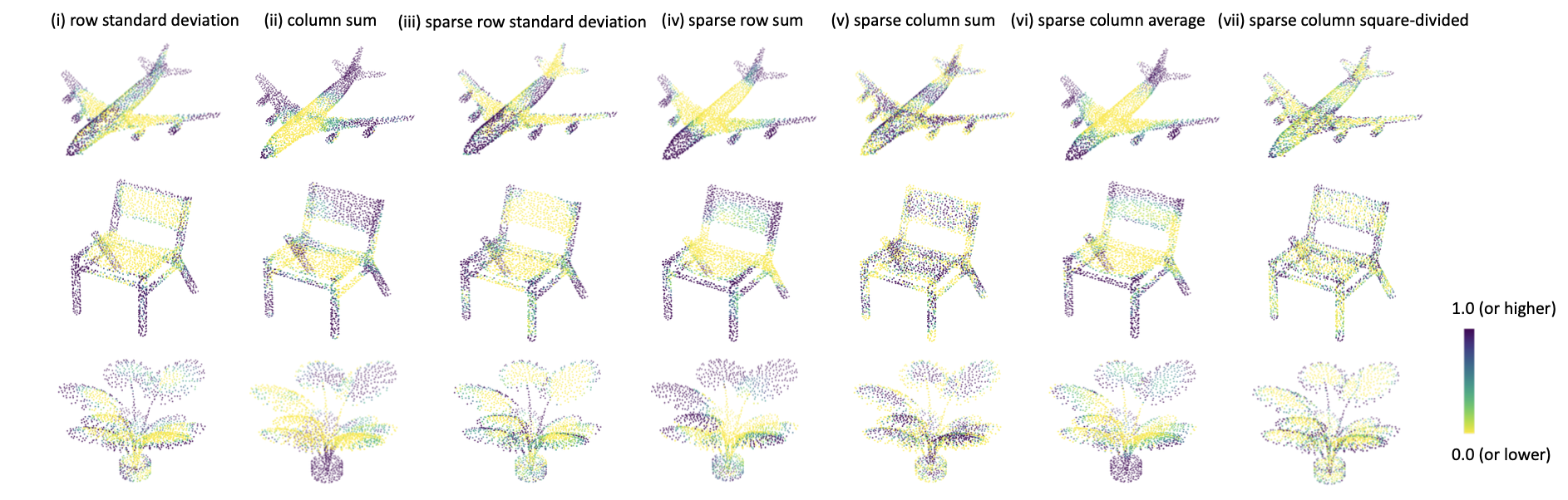

Fig. 1: A brief pipeline of our proposed method SAMBLE. It learns shape-specific sampling strategies for point cloud shapes.

🔥 Our predecessor paper APES was accepted by CVPR 2023 as a Highlight!

Driven by the increasing demand for accurate and efficient representation of 3D data in various domains, point cloud sampling has emerged as a pivotal research topic in 3D computer vision. Recently, learning-to-sample methods have garnered growing interest from the community, particularly for their ability to be jointly trained with downstream tasks. However, previous learning-based sampling methods either produce unrecognizable sampling patterns by generating a new point cloud, or yield biased sampling results by focusing excessively on sharp edge details. Moreover, they all overlook the natural variations in point distribution across different shapes and apply a similar sampling strategy to every point cloud. In this paper, we propose a Sparse Attention Map and Bin-based Learning method (termed SAMBLE) to learn shape-specific sampling strategies for point cloud shapes. SAMBLE achieves a better balance between sampling edge points for local detail and preserving global shape uniformity, resulting in superior performance across multiple common point cloud downstream tasks, even when only a few points are sampled.

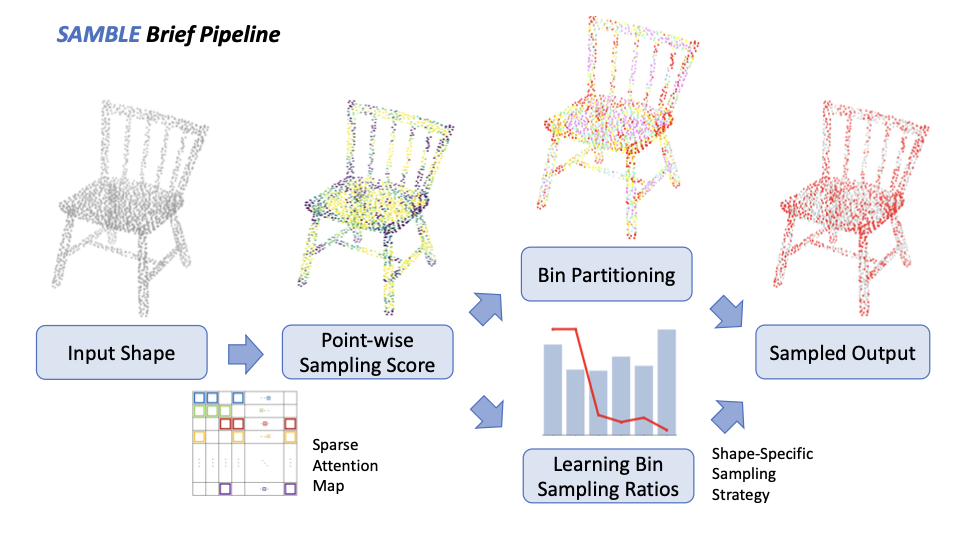

Fig. 2: Sparse attention map. In each row, k cells are selected based on the k-nearest-neighbor (KNN) indices of the corresponding point; the values of all other, non-selected cells are set to 0. Note that the number of selected cells varies from column to column.
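The construction in Fig. 2 can be sketched in plain Python. This is a minimal illustration under stated assumptions, not our actual implementation: the dot-product logits, the softmax restricted to the k selected neighbors, and the function names `knn_indices`, `sparse_attention_map`, and `column_counts` are all hypothetical. It shows the key property from the caption: every row has exactly k nonzero cells, while the number of selected cells per column varies.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def knn_indices(points: List[Vector], k: int) -> List[List[int]]:
    """For each point, return the indices of its k nearest neighbors (the point itself included)."""
    neighbors = []
    for p in points:
        # Sort all points by Euclidean distance to p; ties are broken by index.
        ranked = sorted((math.dist(p, q), j) for j, q in enumerate(points))
        neighbors.append([j for _, j in ranked[:k]])
    return neighbors


def sparse_attention_map(features: List[Vector], points: List[Vector], k: int) -> List[List[float]]:
    """Build an N x N map where row i holds attention weights only on the k neighbors of point i."""
    n = len(points)
    attn = [[0.0] * n for _ in range(n)]  # non-selected cells stay 0
    for i, nbrs in enumerate(knn_indices(points, k)):
        # Hypothetical scoring: dot-product logits, softmax over the k selected neighbors only.
        logits = [sum(a * b for a, b in zip(features[i], features[j])) for j in nbrs]
        peak = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(x - peak) for x in logits]
        total = sum(exps)
        for j, e in zip(nbrs, exps):
            attn[i][j] = e / total
    return attn


def column_counts(neighbors: List[List[int]], n: int) -> List[int]:
    """Count how many rows select each column; unlike rows, this varies per column."""
    counts = [0] * n
    for nbrs in neighbors:
        for j in nbrs:
            counts[j] += 1
    return counts


if __name__ == "__main__":
    pts = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (10.0, 0.0)]
    attn = sparse_attention_map(pts, pts, k=2)  # coordinates double as toy features
    print(column_counts(knn_indices(pts, 2), len(pts)))  # [2, 3, 2, 1]
```

With k = 2, each of the four rows selects two cells, yet the isolated point at x = 10 is selected by only one row and the middle point by three, which is why column-wise reductions of this map must account for a variable number of entries.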

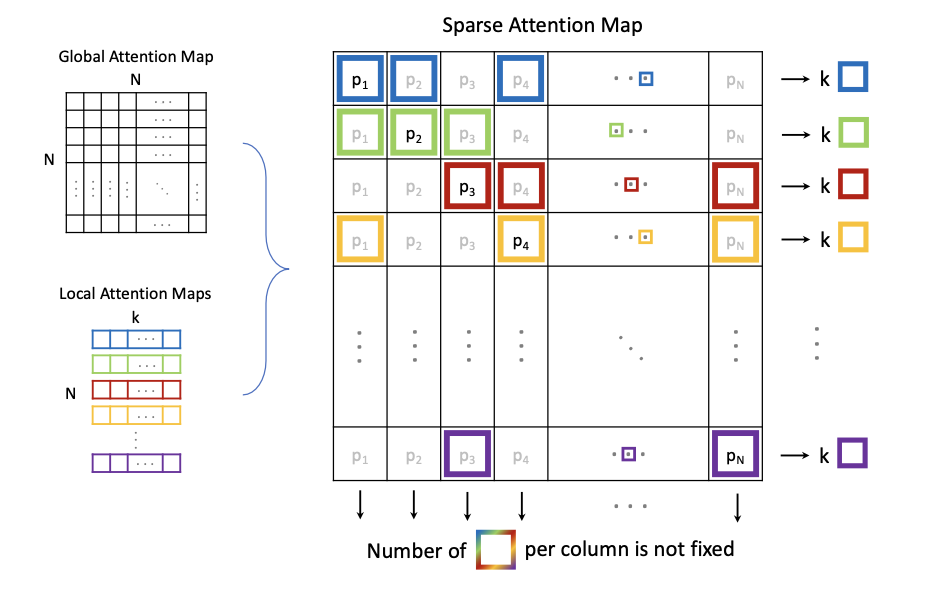

Tab. 1: Different indexing modes proposed for computing point-wise sampling scores.
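Because each column of the sparse map contains a variable number of selected cells, the way a column is reduced to a single score matters. The sketch below contrasts two illustrative reductions, a plain column sum and a mean over only the selected cells. These two modes, the toy matrix `ATTN`, and the function names are hypothetical examples chosen for illustration; they are not claimed to be the modes listed in Tab. 1.

```python
from typing import List

# Hypothetical 3-point sparse map: row i is nonzero only on the neighbors it selected.
ATTN = [
    [0.5, 0.5, 0.0],
    [0.4, 0.6, 0.0],
    [0.0, 0.3, 0.7],
]
# Selection mask derived from the nonzero cells of the toy map.
MASK = [[cell != 0.0 for cell in row] for row in ATTN]


def column_sum(attn: List[List[float]]) -> List[float]:
    """Score each point by the total attention it receives from all rows."""
    n = len(attn)
    return [sum(attn[i][j] for i in range(n)) for j in range(n)]


def column_mean_over_selected(attn: List[List[float]], mask: List[List[bool]]) -> List[float]:
    """Score each point by the average attention over only the rows that selected it."""
    n = len(attn)
    scores = []
    for j in range(n):
        vals = [attn[i][j] for i in range(n) if mask[i][j]]
        scores.append(sum(vals) / len(vals) if vals else 0.0)
    return scores


if __name__ == "__main__":
    print(column_sum(ATTN))                       # approx. [0.9, 1.4, 0.7]
    print(column_mean_over_selected(ATTN, MASK))  # approx. [0.45, 0.467, 0.7]
```

The column sum ranks point 1 highest simply because three rows selected it, whereas the per-column mean ranks point 2 highest; the choice of reduction therefore changes which points receive high sampling scores.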

Fig. 3: Point sampling score heatmaps under different indexing modes. Scores are normalized to N(0.5, 1) for better visualization.
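A minimal sketch of the visualization normalization, assuming that N(0.5, 1) means the scores of one shape are affinely rescaled to mean 0.5 and standard deviation 1; the function name `normalize_for_heatmap` is hypothetical. Since the mapping is a positive affine transform, it changes only the color range of the heatmap, never the ranking of points.

```python
import statistics
from typing import List, Sequence


def normalize_for_heatmap(scores: Sequence[float], mean: float = 0.5, std: float = 1.0) -> List[float]:
    """Rescale scores to the given mean and population standard deviation (assumed reading of N(0.5, 1))."""
    mu = statistics.fmean(scores)
    sigma = statistics.pstdev(scores)
    if sigma == 0.0:
        # All scores are equal: place every point at the target mean.
        return [mean] * len(scores)
    return [mean + std * (s - mu) / sigma for s in scores]


if __name__ == "__main__":
    print(normalize_for_heatmap([1.0, 2.0, 3.0]))  # middle value maps exactly to 0.5
```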

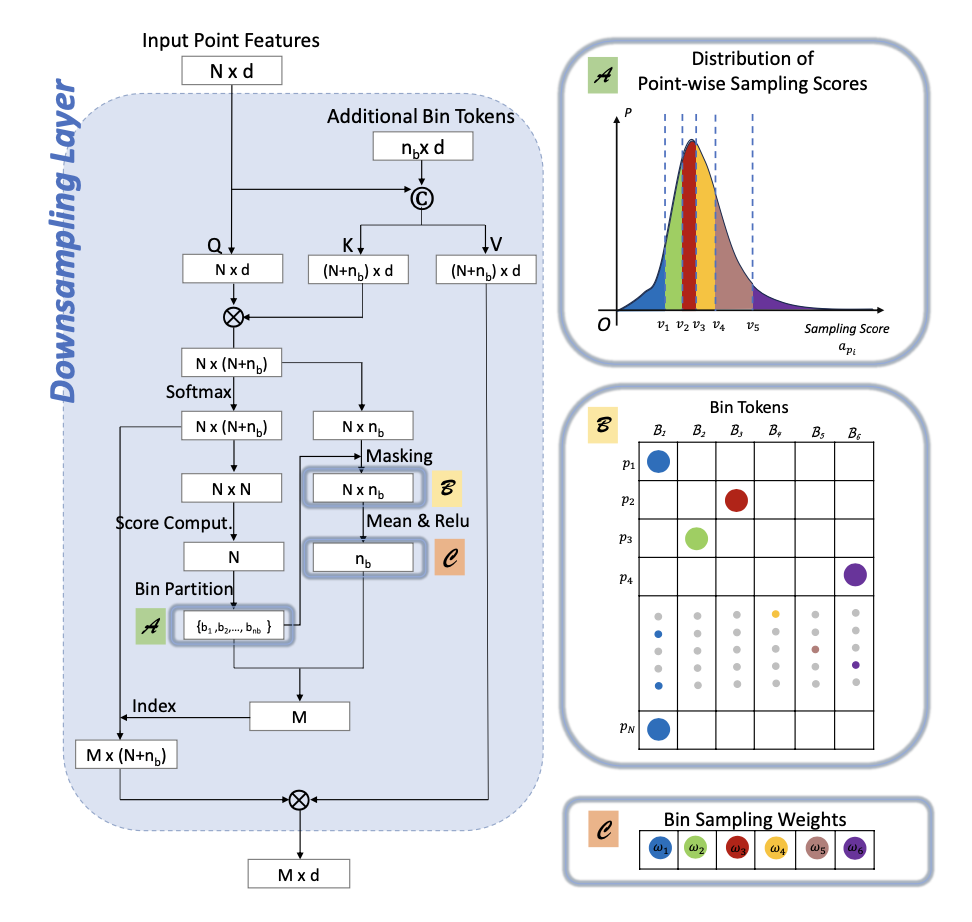

Fig. 4: Network structure of our proposed downsampling layer. Block A: the points of each shape are partitioned into $n_b$ bins. Block B: masking of the split-out point-to-token sub-attention map. Block C: learned bin sampling weights.
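The interplay of Blocks A and C can be illustrated with a small, non-learned sketch. Several assumptions are made here and labeled as such: points are split into equal-count bins by descending sampling score, the per-bin weights are given rather than learned, the sample budget m is allocated proportionally to the weights (capped by bin size), and each bin contributes its top-scoring points. The functions `partition_into_bins`, `allocate_samples`, and `bin_based_sample` are hypothetical and do not reproduce our actual layer; they only show how bin weights let a shape trade edge points for more uniform coverage.

```python
from typing import List, Sequence


def partition_into_bins(scores: Sequence[float], n_bins: int) -> List[List[int]]:
    """Assumed Block A: split point indices into n_bins equal-count bins, highest scores first."""
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    size, extra = divmod(len(order), n_bins)
    bins, start = [], 0
    for b in range(n_bins):
        end = start + size + (1 if b < extra else 0)
        bins.append(order[start:end])  # indices stay sorted by descending score
        start = end
    return bins


def allocate_samples(weights: Sequence[float], bin_sizes: Sequence[int], m: int) -> List[int]:
    """Split the budget m across bins in proportion to the (here fixed) bin weights."""
    if m > sum(bin_sizes):
        raise ValueError("m exceeds the number of points")
    total_w = sum(weights)
    raw = [m * w / total_w for w in weights]
    counts = [min(int(r), cap) for r, cap in zip(raw, bin_sizes)]
    # Hand out leftover samples one at a time, largest fractional share first, skipping full bins.
    order = sorted(range(len(weights)), key=lambda b: raw[b] - int(raw[b]), reverse=True)
    remaining = m - sum(counts)
    while remaining > 0:
        for b in order:
            if remaining > 0 and counts[b] < bin_sizes[b]:
                counts[b] += 1
                remaining -= 1
    return counts


def bin_based_sample(scores: Sequence[float], n_bins: int, weights: Sequence[float], m: int) -> List[int]:
    """Pick m point indices: each bin contributes its top-scoring points up to its allocated count."""
    bins = partition_into_bins(scores, n_bins)
    counts = allocate_samples(weights, [len(b) for b in bins], m)
    selected = []
    for bin_indices, c in zip(bins, counts):
        selected.extend(bin_indices[:c])
    return sorted(selected)


if __name__ == "__main__":
    scores = [0.9, 0.1, 0.8, 0.3, 0.7, 0.2, 0.6, 0.4]
    print(bin_based_sample(scores, n_bins=2, weights=[1.0, 3.0], m=4))  # [0, 3, 5, 7]
```

Plain top-k selection would return the four highest-scoring points [0, 2, 4, 6]; with the weights [1, 3], the budget shifts toward the low-score bin, so only one high-score point is kept and three lower-score points are added.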

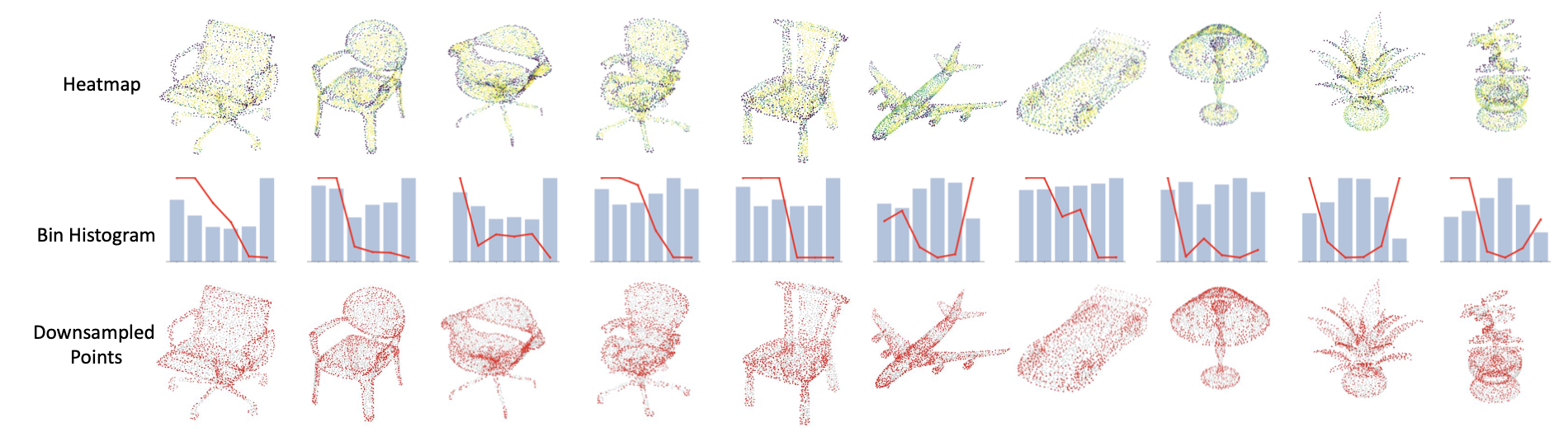

Fig. 5: Qualitative results of our proposed SAMBLE. In addition to the sampled results, sampling score heatmaps and bin histograms with their bin sampling ratios are shown. All shapes are from the test set. Zoom in for best visual clarity.

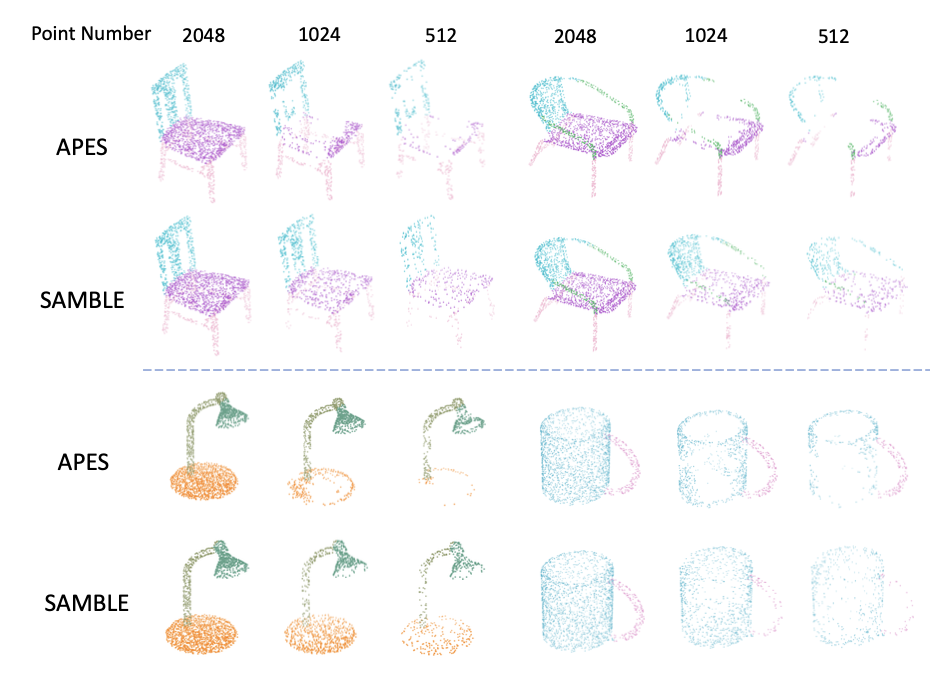

Fig. 6: Segmentation results of our proposed SAMBLE in comparison with APES. All shapes are from the test set.

@inproceedings{wu2023attention,
  title={Attention-Based Point Cloud Edge Sampling},
  author={Wu, Chengzhi and Zheng, Junwei and Pfrommer, Julius and Beyerer, J\"urgen},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2023}
}

@inproceedings{wu2025samble,
  title={SAMBLE: Shape-Specific Point Cloud Sampling for an Optimal Trade-Off Between Local Detail and Global Uniformity},
  author={Wu, Chengzhi and Wan, Yuxin and Fu, Hao and Pfrommer, Julius and Zhong, Zeyun and Zheng, Junwei and Zhang, Jiaming and Beyerer, J\"urgen},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year={2025}
}

|